After finding a heuristic function and an evaluation function, another way for finding the target-state is iterative improvement.

These algorithms are able to solve problems where the state space is continuous. Also when we don’t know the characteristics of the target-state

, but we have some evaluation function

(potential field) to minimize. These methods scale well to high dimensions where no other methods work. They also don’t require high storage.

We start from the start-state and pick the best neighboring state

, then, from

, pick the best neighboring state

and so on… Until, we reach a state

that is better than all its neighbors. Then we halt.

hill_climbing(start) u = start while true v = minimum valued successor of u if (f(v) >= f(u)) return u u = v

However, it may halt at a local minimum.

Runs Steepest Descent multiple times from random start states.

random_hill_climbing() min = random node S = a list of random start nodes for each node u in S v = hill_climbing(u) if f(v) < f(min) min = v return min

This algorithm lowers the chances of halting at local minimum.

From the current state , it picks the next state

randomly. If

gets us lower heuristic (good), we proceed to

. If not (bad), we are supposed to be at a local minimum. Try to escape the local minimum

with some probability. The more bad

is compared to

, the more likely we’re going to stick with our old state

.

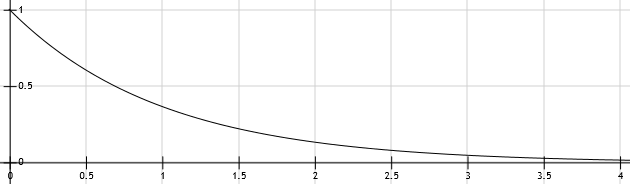

simulated_annealing(start, schedule) u = start for t = 1, 2, ... T = schedule(t) if T = 0 return u v = random successor of u delta = f(u) - f(v) if (delta > 0) u = v else u = v with probability e^(delta/T)

The parameter controls the speed of convergence.

should be decreasing to make sure the algorithm is more likely to halt the more it proceeds.

This means: escape local minima by allowing some bad moves, but gradually decrease their frequency.

2 thoughts on “Heuristic Search & AI – 3”